That day I rage-quit my project

It finally fell apart over a UI reskin.

I had an application that mostly worked. It was not polished, but it was coherent, testable, and good enough to keep moving. When I asked the AI to reskin it using my brand colours, what I expected to be a contained visual adjustment triggered a cascade of changes I neither asked for nor understood. Components failed, and behaviour broke in places that should not have been touched. Code that had been stable an hour earlier now behaved unpredictably or failed outright.

After several increasingly frustrated attempts to reverse the damage, I deleted the repository and started again.

That decision was less dramatic than it sounds. It was simply the moment I admitted that the problem was not the AI, but the way I had been working with it.

Why vibe coding feels so good at first

AI-assisted development often begins with a sense of acceleration. You describe the system you want, the model generates large volumes of code, and visible progress arrives quickly. For a while, that approach genuinely works.

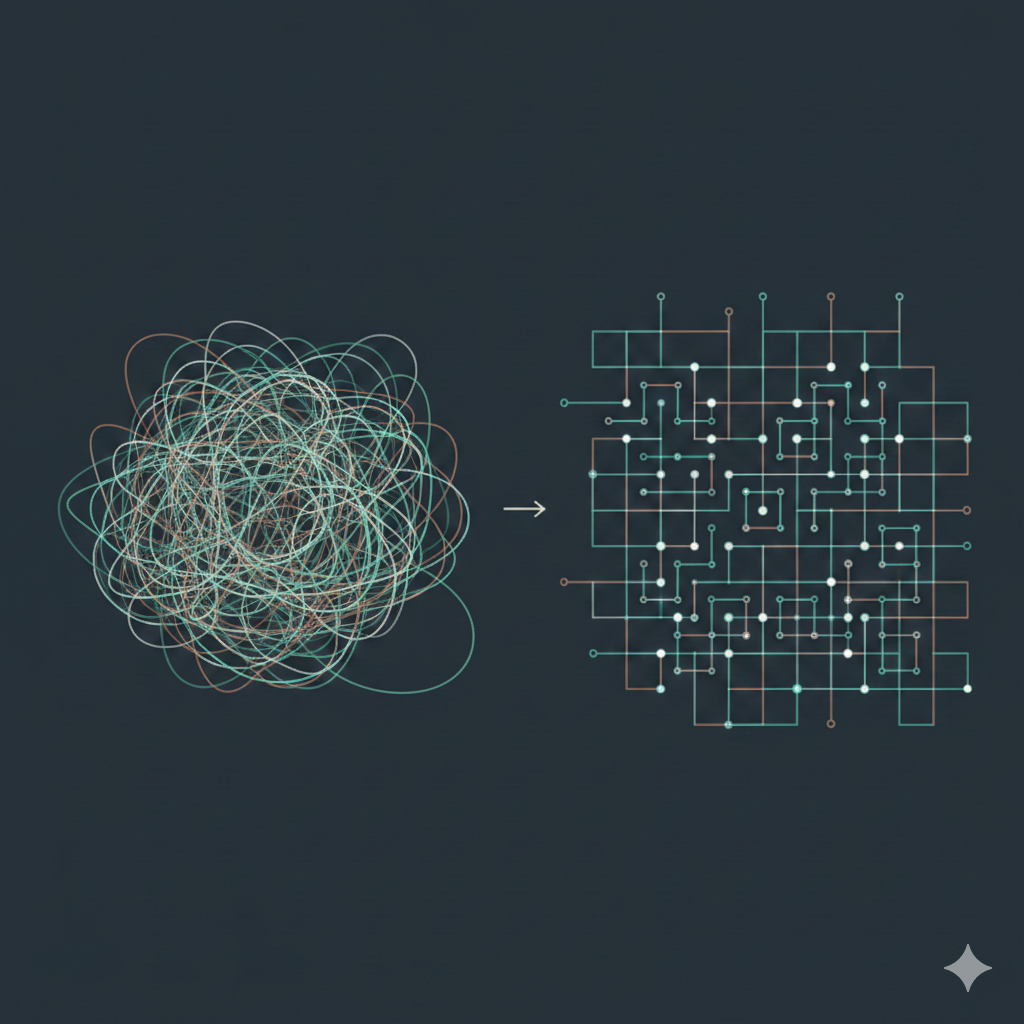

What it conceals is the gradual erosion of structure.

In my case, I was competent enough in Python to recognise when something looked reasonable, but far less fluent in React and TypeScript. The AI’s output was articulate, confident, and superficially consistent, which made it easy to accept decisions I would have questioned more rigorously in a familiar stack. Additional abstractions appeared. Logic spread sideways into files I had never intended to change. Nothing failed decisively, so I continued.

Eventually, a change that should have been trivial exposed how little I understood the system I now owned. At that point, diagnosing failures required far more effort than implementing the original feature ever should have demanded.

What actually went wrong

The mistake was allowing the AI to operate without meaningful constraints.

A coding model optimises for helpfulness. In practice, that means doing more than requested. It refactors while adding behaviour. It anticipates future requirements. It applies patterns that make sense locally without regard for the wider system. Reviewing the output afterwards provides limited protection, because the scope of change has already expanded beyond easy comprehension.

What was missing from my workflow was deliberate restriction. I needed the AI to operate within clearly defined limits, with explicit rules governing what it was allowed to modify and when.


Two rules that stopped the chaos

When I restarted the project, I rebuilt my approach around two principles that now govern every interaction with the AI.

Rule one: make code review boring and strict again

The first principle is to treat code review as a maintainer activity, not a conversation. This idea is directly inspired by a post from Angie Jones on teaching GitHub Copilot to think like a maintainer, which I have linked here.

In practice, this meant separating roles across models. I use Codex to do the work inside the repository, writing tests and implementing changes in small steps. I then use Gemini purely as a reviewer, pointing it at the branch and asking it to behave like a cautious, opinionated maintainer. Gemini does not write code. It audits it.

The review rules are deliberately strict. The model is instructed to comment only when it has high confidence that a real issue exists. Every comment must be actionable, ideally with a concrete suggestion for how to fix the problem. Observations without a clear corrective path are suppressed. Style, formatting, and naming are explicitly ignored in favour of correctness, safety, and architectural integrity. The effect of this is immediate. Reviews become quieter, shorter, and far more useful. When Gemini flags something, it is almost always worth stopping and paying attention.
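In my setup these rules live in the reviewer's instructions, not in code, but it can help to see them stated precisely. The sketch below expresses them as a Python post-processing filter. The `ReviewComment` shape, the `confidence` score, the 0.8 threshold and the category labels are all assumptions for illustration. They are not part of Gemini's API or output format.

```python
from dataclasses import dataclass
from typing import Optional

# Assumed category labels that the review rules say to ignore.
IGNORED_CATEGORIES = {"style", "formatting", "naming"}
# Hypothetical cut-off for "high confidence"; the rules themselves give no number.
MIN_CONFIDENCE = 0.8


@dataclass
class ReviewComment:
    """One finding from the reviewer model (hypothetical shape)."""
    file: str
    message: str
    category: str                     # e.g. "correctness", "safety", "style"
    confidence: float                 # 0.0 to 1.0, as reported by the reviewer
    suggestion: Optional[str] = None  # concrete fix, if one was offered


def keep_comment(comment: ReviewComment) -> bool:
    """Apply the maintainer-mode rules to a single comment."""
    if comment.category.lower() in IGNORED_CATEGORIES:
        return False  # style, formatting and naming are out of scope
    if comment.confidence < MIN_CONFIDENCE:
        return False  # only high-confidence findings survive
    # A finding without a concrete fix is suppressed.
    return bool(comment.suggestion and comment.suggestion.strip())


def filter_review(comments: list[ReviewComment]) -> list[ReviewComment]:
    """Return only the comments worth stopping for."""
    return [c for c in comments if keep_comment(c)]


# Example: only the first comment survives the rules.
sample = [
    ReviewComment("api.ts", "Response may be null", "correctness", 0.9,
                  "Check the response before reading fields"),
    ReviewComment("Button.tsx", "Prop name is unclear", "naming", 0.95, "Rename it"),
    ReviewComment("theme.ts", "This might be slow", "performance", 0.4),
]
kept = filter_review(sample)
```

The naming comment is dropped despite its high confidence, and the vague performance remark is dropped for having neither confidence nor a fix. That matches the intent: fewer comments, each one worth acting on.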

Rule two: never tidy and change behaviour at the same time

The second principle comes from Kent Beck and his Tidy First approach to development and code review, which he writes about here.

The core idea is to keep structural changes and behavioural changes separate, and never attempt both at the same time. Structural changes exist to make the code easier to read, reason about, and modify. Behavioural changes exist to add features or fix defects. When these are mixed, intent becomes unclear and risk increases, particularly when an AI is generating the changes.

I enforce this separation explicitly with the AI. Any structural tidying happens first and must leave behaviour unchanged. Only once the structure is stable do I allow behavioural changes. This discipline matters because AI models are very good at making multiple plausible changes at once, and very bad at signalling which of those changes actually mattered. By keeping tidying and behaviour separate, each step becomes smaller, clearer, and easier to validate. When a tidying task starts to feel complex or time-consuming, that is a reliable signal that the work has not been broken down far enough.
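One simple way to hold a tidying step to that standard is a characterisation check. Run the old and new versions side by side over sample inputs, and require identical results before any behavioural change is allowed. The `order_total` functions below are a hypothetical example, not code from my project. Prices are in integer pence, which keeps the comparison exact.

```python
# Hypothetical example: before tidying, all logic is inline.
def order_total_before(prices_pence: list[int], member: bool) -> int:
    total = 0
    for price in prices_pence:
        total += price
    if member and total > 10_000:
        total = total * 90 // 100
    return total


# After a structural tidy: the same logic, extracted into named helpers.
def _subtotal(prices_pence: list[int]) -> int:
    return sum(prices_pence)


def _apply_member_discount(total: int, member: bool) -> int:
    return total * 90 // 100 if member and total > 10_000 else total


def order_total_after(prices_pence: list[int], member: bool) -> int:
    return _apply_member_discount(_subtotal(prices_pence), member)


def behaviour_unchanged(samples: list[tuple[list[int], bool]]) -> bool:
    """Characterisation check: the tidy must not change any output."""
    return all(
        order_total_before(prices, member) == order_total_after(prices, member)
        for prices, member in samples
    )


SAMPLES = [
    ([], False),
    ([1000, 2050], True),
    ([6000, 5000], True),   # discount applies
    ([6000, 5000], False),
    ([10_000], True),       # boundary: no discount at exactly 100.00
]
```

If the check fails, the step was not a pure tidy, and it goes back to the AI before anything else happens.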

These two rules have allowed me to move more predictably within tight constraints. Gemini acts as a conservative maintainer who is difficult to impress. Kent Beck’s structural discipline keeps the surface area of change small. The result is not just cleaner code, but a workflow that stays understandable as the system grows.

What my workflow looks like now

I treat the work as a product, even when I am the sole user.

I maintain a backlog in Notion where each task captures intent and acceptance criteria rather than implementation detail. Alongside that sits a single Markdown document that functions as the project’s long-term memory. It defines scope, goals, architectural decisions, technology choices, testing approach, and unresolved questions. This document evolves slowly and deliberately.
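Because the AI reads this document at the start of every task, it is worth checking occasionally that it still covers everything it is meant to. The sketch below assumes each area is a Markdown heading named after the list above. The exact heading names and the `missing_sections` helper are my own illustration, not a fixed template.

```python
import re

# Sections the project memory document should define (heading names assumed).
REQUIRED_SECTIONS = [
    "Scope",
    "Goals",
    "Architectural decisions",
    "Technology choices",
    "Testing approach",
    "Unresolved questions",
]

# Matches ATX-style Markdown headings such as "## Scope".
HEADING = re.compile(r"^#{1,6}[ \t]+(.+?)[ \t]*#*[ \t]*$", re.MULTILINE)


def missing_sections(markdown: str) -> list[str]:
    """Return the required sections that have no matching heading."""
    headings = {m.group(1).strip().lower() for m in HEADING.finditer(markdown)}
    return [s for s in REQUIRED_SECTIONS if s.lower() not in headings]


# Example: a context document that has not yet recorded testing or open questions.
SAMPLE = """# Project context
## Scope
## Goals
## Architectural decisions
## Technology choices
"""
```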

When I start a task, I pull in the relevant backlog item and the project context. I ask the AI to propose the smallest possible test-driven steps required to implement the change. That plan is written down, reviewed, and adjusted before any code is written.
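One way to review such a plan before any code exists is to give each step an explicit kind and a single test, then reject plans that break the tidy-first ordering. This is a hypothetical sketch: `PlanStep`, `plan_problems` and the sample steps are invented for illustration. Treating any tidy after a behaviour change as a problem is one strict reading of my own rule, not something Kent Beck prescribes.

```python
from dataclasses import dataclass


@dataclass
class PlanStep:
    """One step in a task plan (hypothetical structure)."""
    description: str
    kind: str  # "tidy" (structure only) or "behaviour"
    test: str  # the single test that pins this step down


def plan_problems(steps: list[PlanStep]) -> list[str]:
    """Return reasons to send the plan back; an empty list means it is ready."""
    problems = []
    seen_behaviour = False
    for number, step in enumerate(steps, start=1):
        if step.kind not in ("tidy", "behaviour"):
            problems.append(f"step {number}: kind must be 'tidy' or 'behaviour'")
        if not step.test.strip():
            problems.append(f"step {number}: no test named")
        if step.kind == "behaviour":
            seen_behaviour = True
        elif step.kind == "tidy" and seen_behaviour:
            problems.append(f"step {number}: tidying after a behaviour change")
    return problems


# Example plan for a reskin task (invented for illustration).
plan = [
    PlanStep("Move colour values into one theme module", "tidy",
             "existing component tests still pass"),
    PlanStep("Use the brand primary colour for buttons", "behaviour",
             "button renders with theme primary colour"),
    PlanStep("Rename theme helpers", "tidy", "existing component tests still pass"),
]
```

Here the third step would be flagged and moved into a separate task, leaving this one as a single tidy followed by a single behaviour change.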

How I work with the AI in practice

The interaction loop is intentionally precise and deliberately paced.

The AI writes a test and stops. I review it. The AI implements the minimum logic required to satisfy the test and stops again. I review the change before allowing it to proceed. This pattern repeats until the task is complete.
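To make the loop concrete, here is what a single test-then-implement cycle might look like. My project is React and TypeScript, but the shape is the same in any language. This sketch uses Python and a hypothetical `hex_to_rgb` helper for brand colours. The implementation deliberately handles only what the test demands, so shorthand values such as `#abc` would need a new test before any code supports them.

```python
# Step 1: the AI writes a failing test, then stops for review.
def test_hex_to_rgb_parses_brand_colour():
    assert hex_to_rgb("#1A2B3C") == (26, 43, 60)


# Step 2: after review, the AI writes the minimum code to pass, then stops again.
def hex_to_rgb(value: str) -> tuple[int, int, int]:
    digits = value.lstrip("#")
    # Only six-digit hex is handled; nothing else is tested yet.
    return (int(digits[0:2], 16), int(digits[2:4], 16), int(digits[4:6], 16))


if __name__ == "__main__":
    test_hex_to_rgb_parses_brand_colour()
    print("ok")
```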

This approach involves frequent pauses and frequent review. It also prevents the long, demoralising debugging sessions that emerge when errors are allowed to accumulate unnoticed. When I encounter code I do not understand, I ask why it exists. When I disagree with a solution, I redirect the AI immediately.

At regular intervals, I run an additional review using a different model, focused purely on structure and maintainability. Any findings are captured and addressed using the same small, explicit steps.

Managing context windows is part of the work. Writing decisions down is how I preserve intent over time.

Why this ends up being faster

Before adopting this approach, tasks that appeared simple routinely consumed an entire day. Progress was followed by regressions, which required fixes that introduced further issues.

With tighter boundaries and smaller steps, the same class of task now takes a few hours of steady, predictable progress. The AI has not become faster. The work has become more controlled. That control is what eliminates wasted effort.

The takeaway

Asking an AI to build an application invites complexity you will later have to untangle. Asking it to make one small, clearly defined change produces results you can trust.

Speed emerges from discipline. When you manage context deliberately, you shape the quality of the system you are building.

Let’s compare notes

This way of working is still evolving, and I am interested in how others are navigating similar constraints. How are you managing backlog and context when working with AI tools? Have you experimented with using one model to review the output of another?

If there is interest, I am happy to share the prompts and review rules I use to keep my AI operating in maintainer mode.
