Every tester has had that moment. You look for guidance on a current problem and end up with advice that feels twenty years old. You find articles on writing test cases in Excel, or long arguments about whether automated testing can ever replace manual testing. It is a little surreal.

It is easy to assume people are simply behind the times, but the real issue is structural. The internet rarely forgets, so older knowledge accumulates faster than new insight can displace it.

The content imbalance problem

Fresh ideas appear every day, but the older ones never really disappear. For every new post, paper, or talk, there are decades of material still circulating and still discoverable. The result is an enduring imbalance: the visible body of “old” knowledge always outweighs the “new.”

This effect is compounded by human habit. Many experienced professionals continue to teach what worked for them earlier in their careers. The intent is good, but the result is that practices from another era remain part of the mainstream conversation. Familiarity is persuasive, even when it no longer fits.

Seeing the imbalance

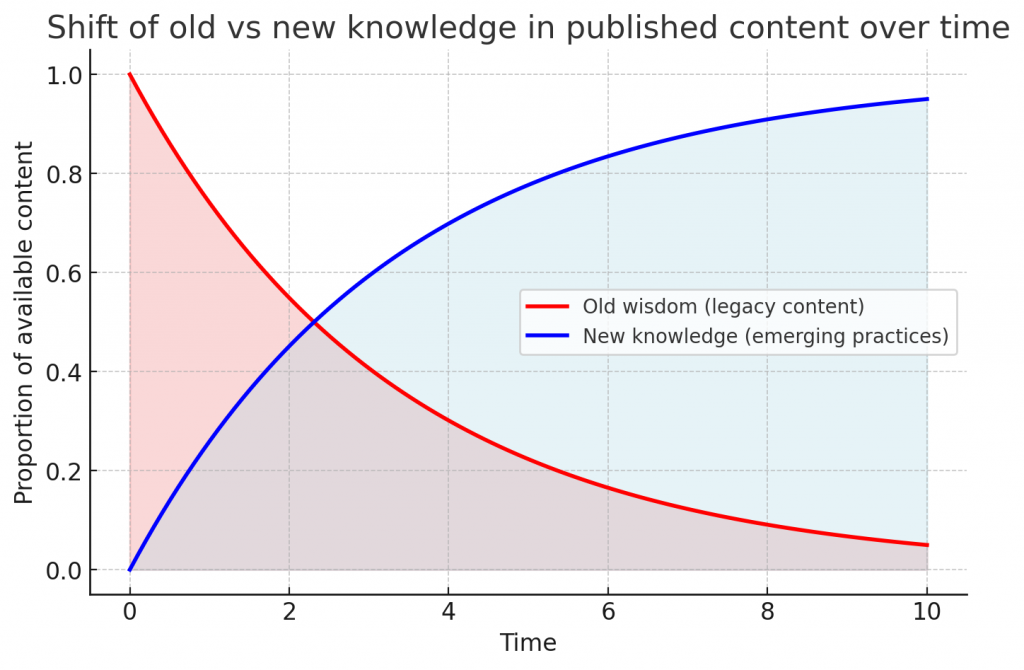

Picture a graph showing the proportion of old and new knowledge in online publications. At first, almost everything represents established wisdom. As new approaches emerge, the balance begins to shift, but the older content does not vanish. It lingers, gradually fading but never gone.

That is why the internet’s collective memory tends to lean toward the past. Old knowledge decays slowly, and new knowledge takes time to mature and spread.

The image above is AI-generated and, funnily enough, doesn't really show what it claims to show. A case in point, I think.

How AI amplifies the effect

The recent surge of AI tools has made this imbalance even more visible. Most large language models are trained on vast amounts of public internet data, much of which reflects the same outdated guidance that dominates search results. When these systems generate explanations or examples, they often reproduce patterns from earlier eras of software development.

This creates a feedback loop: old advice becomes even more entrenched because it is repeated, reformatted, and recirculated by automated systems that were trained on it. Unless the underlying training data shifts toward more recent, evidence-based sources, AI will continue to amplify the lag between how we work and how we talk about work.

Why this matters in testing

In software testing, this imbalance has real consequences. New practitioners often learn from what is easiest to find rather than what is most current. They repeat patterns that were once best practice under very different delivery models.

It also drives ongoing confusion about language. Terms such as QA (quality assurance), QE (quality engineering), SDET (software development engineer in test), and tester are used interchangeably, even though each describes a distinct perspective. The result is misalignment and unproductive debate. Teams believe they disagree on principles when, in fact, they are speaking from different points in history.

The problem is not unique to testing, but it is more visible here because the discipline has evolved so rapidly. Agile, DevOps, and AI have each changed how we define quality and how we deliver it, yet much of the guidance still reflects an earlier, slower world.

Updating our collective wisdom

Shifting the balance requires us to treat learning as a continuous act, not a one-time achievement. It means reviewing the materials we share with others and asking whether they still reflect how we actually work. It means refreshing onboarding guides, rethinking conference talks, and replacing outdated internal playbooks.

We do not need to erase the past. Some of that older knowledge remains valuable. But we do need to keep layering new understanding on top until it becomes what people encounter first.

Closing thought

The reason so much testing content feels stuck in the past is not a lack of curiosity or capability. It is the persistence of information. Our collective library never resets, and every new idea has to make itself heard over the echo of everything that came before it.

Our task is not to lament that imbalance but to keep contributing newer, clearer insights until they define the conversation.