You know the feature inside out. You have tested it, broken it, rebuilt the test suite around it twice. So you ask the AI to generate a few test cases and save yourself twenty minutes.

What comes back looks reasonable. The structure is right. The naming conventions are close enough. Then you start reading.

The first test doesn’t check what it claims to check. The second one tests a condition that can never fail. The third repeats the second with different variable names. By the fourth, you are rewriting from scratch, and the twenty minutes you were saving have become forty minutes of cleanup.
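If you have not met the "condition that can never fail" in the wild, here is a minimal sketch of what it tends to look like. The function `apply_discount` and both test names are hypothetical, made up for illustration rather than taken from any real suite or survey response.

```python
def apply_discount(price: float, percent: float) -> float:
    """Hypothetical function under test: reduce a price by a percentage."""
    return round(price * (1 - percent / 100), 2)


def test_discount_is_applied_vacuous() -> None:
    # Looks like a check, but it compares the result to itself,
    # so it passes no matter what apply_discount returns.
    result = apply_discount(100.0, 20.0)
    assert result == result


def test_discount_is_applied() -> None:
    # Pins the expected value, so a broken calculation fails the test.
    assert apply_discount(100.0, 20.0) == 80.0
```

Both tests go green today. Only the second one would go red if someone broke the calculation, and that is the difference you end up hunting for when you review generated tests.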

You are not alone in this. I ran a survey last month asking testers about their biggest AI frustrations, and 65% of them described exactly this pattern. One respondent put it perfectly:

“It turns into an exercise of ‘no wait, not what I meant, let me explain’ on repeat.”

Another called the output “slop.” As in, Merriam-Webster’s 2025 word of the year. That word resonated because it names something specific. Not wrong, exactly. Not right either. Just… plausible enough to waste your time.

The job you didn’t apply for

Here is what I keep hearing from testers who are deep in this cycle. The work has changed, but nobody updated the job description.

You used to spend your days thinking about risk, about where the system could break, about what mattered to the people using the software. Now a growing chunk of your week goes to reading AI output, deciding what to keep, fixing what is almost right, and throwing away what is not.

“The tools still just do whatever they please and need a LOT of effort to keep them on course, keeping them from straying too far out into the wood. It’s exhausting.”

That word keeps coming up. Exhausting. Not because the work is hard in the way testing has always been hard, but because it is a new kind of hard. You are spending cognitive energy managing a tool instead of doing the thing you are good at.

And the frustrating part is that the tool works just often enough to keep you using it. You get one good result out of five, and that is enough to make you try again tomorrow. The promise of speed is always one prompt away.

Meanwhile, upstairs

While you are sifting through AI output, your manager is reading a different story. They saw the demos. They read the case studies from vendors who sell the tools. They heard that AI makes teams twice as productive.

“Pressure to deliver has increased dramatically because management thinks we must be twice as productive now because we are using AI.”

That survey response came from someone caught between two realities: the reality at their desk, where AI output needs constant babysitting, and the reality in the boardroom, where AI has already delivered its promised gains on a slide deck somewhere.

This is the squeeze that nobody talks about at conferences: the gap between what AI tools actually do and what your organisation believes they do. You are the person standing in that gap, expected to make both sides true.

What is actually going wrong

When I look at the survey responses together, a pattern shows up. The testers who are most frustrated are not using bad tools. Many of them are using the same tools as the people getting decent results. The difference is not the AI.

The difference is what happens before the AI gets involved.

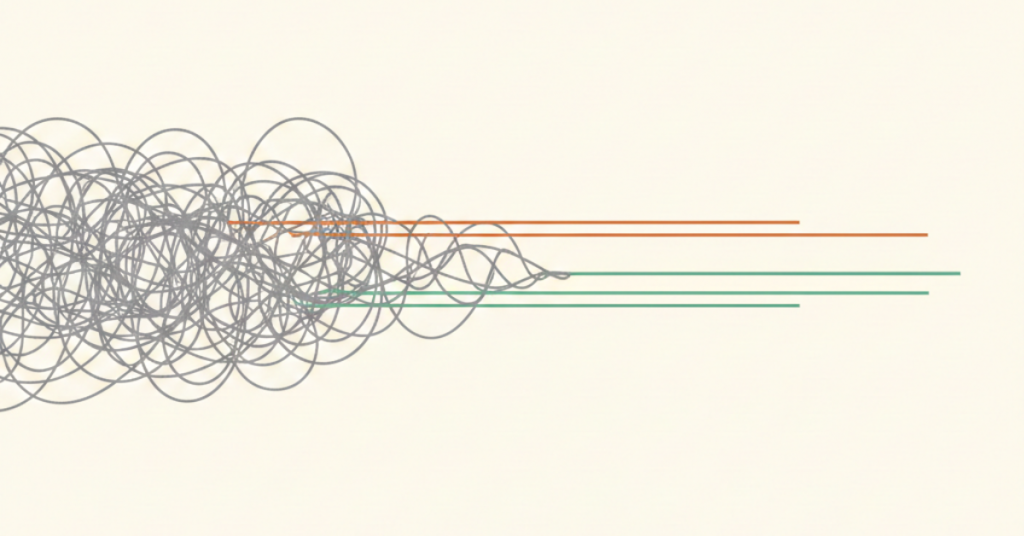

AI amplifies whatever approach you bring to it. If you have a clear sense of what you are testing, why it matters, and what a good result looks like, the AI has something to work with. It can fill in gaps and speed up the boring parts. If you do not have that clarity, the AI will produce more of whatever you gave it. Vague input, vague output. At scale. Fast.

This is why “slop” is such a precise word for the problem. The AI is not failing. It is succeeding at the wrong thing, because nobody told it what the right thing was.

The testers I have worked with who get consistent results from AI tools are not better at prompting. They have a framework for deciding what matters before they open the tool. They know which testing problems are worth handing to AI and which ones are not. They spend five minutes setting up the right question and save an hour on the answer.

That is the part most AI training skips. The courses teach you how to use the tool. They do not teach you how to think about the problem first. And thinking about the problem first is the whole game.

What happens next

On Wednesday I am going to walk through exactly what that looks like in practice. I will show you how testers are using a structured approach to cut through the noise and get output they can actually trust.

If you grabbed the 5 AI Testing Experiments guide last week, you have already seen the starting point. If you have not, you can download it here. It takes about 15 minutes to read and gives you five focused experiments to run against your own workflow.

Either way, I would like to hear from you. Hit reply and tell me: what is your version of the correction loop? What does your “no wait, not what I meant” moment look like? I read every reply.